Deep Generative Methods for predictive maintenance

VAEs and GANs

Predictive maintenance has become a key strategy in many industries, with the goal of predicting when equipment failure may occur. This proactive approach enables timely and cost-effective maintenance and prevents unexpected equipment downtime. Deep generative methods are now being applied to a wide range of problems, so can we use them for predictive maintenance? What are the advantages and disadvantages?

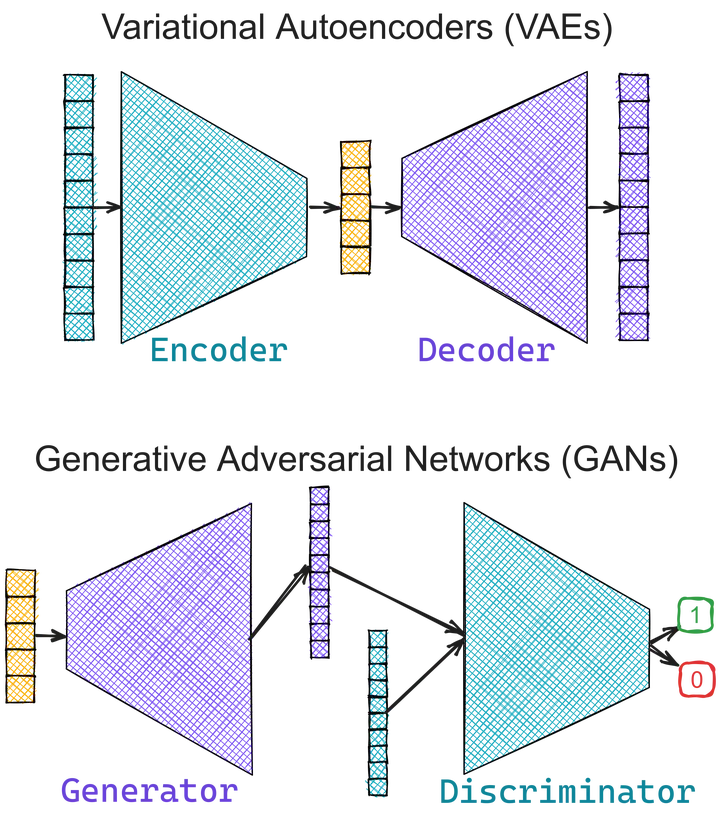

In the following, we will consider two main models: Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). These models can learn to represent complex, high-dimensional data in a lower-dimensional space, capturing the underlying structure and dependencies in the data.

Variational Autoencoders (VAEs)

VAEs are a type of generative model that can learn to encode data into a lower-dimensional space and then decode it back into its original form. In the context of predictive maintenance, VAEs can be trained on normal operating data from machines. The VAE learns to recognize the “normal” state of the machine, and any significant deviation from that state, typically visible as a high reconstruction error, can be flagged as a potential anomaly or precursor to failure.
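To make the idea of "deviation from the normal state" a little more concrete, here is the standard VAE training objective (the negative evidence lower bound) together with one common choice of anomaly score. The symbols are assumptions introduced for illustration and do not come from a specific implementation: $x$ is a sensor reading, $z$ its latent code, $q_\phi$ the encoder, $p_\theta$ the decoder, $p(z)$ the prior, $\hat{x}$ the reconstruction, and $\tau$ a threshold chosen by the user.

$$
\mathcal{L}(\theta, \phi; x) = -\,\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] + D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big)
$$

$$
s(x) = \lVert x - \hat{x} \rVert_2^2, \qquad \text{flag } x \text{ as anomalous if } s(x) > \tau
$$

The first term rewards accurate reconstruction, while the second keeps the encoded distribution close to the prior. A model trained only on normal data tends to reconstruct unfamiliar readings poorly, which is what makes $s(x)$ usable as an anomaly score.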

Generative Adversarial Networks (GANs)

GANs consist of two neural networks - a generator and a discriminator - that are trained simultaneously. The generator attempts to generate data that is indistinguishable from real data, while the discriminator attempts to distinguish between real and generated data. For predictive maintenance, GANs can generate new “normal” data that can be used to improve the robustness of the predictive model.
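The competition between the two networks is usually written as a minimax game. The formula below is the original GAN objective; the symbols are assumptions introduced here for illustration: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real machine data, and $p_z$ the noise distribution fed to the generator.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to assign high probability to real samples and low probability to generated ones, while the generator tries to produce samples $G(z)$ that the discriminator accepts as real. Many practical variants modify this objective, so it should be read as the textbook form rather than as a recipe.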

How to apply them

VAEs are a powerful tool, and using them for predictive maintenance consists of four steps. First, we need the data. We collect operational data from the machines of interest, ideally during normal operation. These data form the basis for training our VAE. Second, we train the VAE on the data. The model learns to encode the data into a lower-dimensional latent space and decode it back to its original form. Third comes anomaly detection. Once the VAE is trained, we can use it to monitor the machines. The VAE encodes and decodes the incoming data, and if the reconstruction deviates significantly from the original, it is likely that an anomaly has occurred. Finally, we can schedule maintenance. Based on the detected anomalies, we proactively plan servicing tasks to prevent unexpected machine downtime.

GANs can be applied in a very similar way, but they offer the additional possibility to use the trained generator to create more “normal” data. This augmented data can be used to improve the robustness of the predictive model.
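The anomaly detection step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: `toy_reconstruct` is a hypothetical stand-in for the encode/decode pass of a trained VAE, the function names are invented for this example, and the "mean plus k standard deviations" rule is just one common heuristic for choosing a threshold.

```python
import statistics
from typing import Callable, List, Sequence

Vector = Sequence[float]


def reconstruction_error(x: Vector, x_hat: Vector) -> float:
    """Mean squared error between a reading and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(normal_errors: Sequence[float], k: float = 3.0) -> float:
    """Heuristic threshold: mean + k * std of the errors seen on normal data."""
    return statistics.mean(normal_errors) + k * statistics.pstdev(normal_errors)


def flag_anomalies(
    readings: Sequence[Vector],
    reconstruct: Callable[[Vector], Vector],
    threshold: float,
) -> List[bool]:
    """Return True for every reading whose reconstruction error exceeds the threshold."""
    return [reconstruction_error(x, reconstruct(x)) > threshold for x in readings]


def toy_reconstruct(x: Vector) -> List[float]:
    """Hypothetical stand-in for a trained VAE (assumption, not a real model).

    It reproduces values in the 'normal' range [0, 1] almost exactly and
    cannot reproduce values outside that range.
    """
    return [min(max(0.5 + 0.9 * (v - 0.5), 0.0), 1.0) for v in x]


# Steps 1-2 (data collection and training) are assumed to have happened already.
normal_data = [
    [0.4, 0.5, 0.6],
    [0.3, 0.5, 0.7],
    [0.45, 0.55, 0.5],
    [0.2, 0.6, 0.8],
]

# Calibrate the threshold on reconstruction errors of normal data.
normal_errors = [reconstruction_error(x, toy_reconstruct(x)) for x in normal_data]
threshold = fit_threshold(normal_errors)

# Step 3: monitor incoming readings; the second one contains a spike.
incoming = [[0.5, 0.5, 0.5], [0.4, 3.0, 0.6]]
flags = flag_anomalies(incoming, toy_reconstruct, threshold)
print(flags)
```

In a real setup, the stand-in would be replaced by the trained model, and the threshold would be tuned on held-out normal data, since a threshold that is too low triggers unnecessary maintenance and one that is too high misses early warnings.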

Advantages and disadvantages

Variational Autoencoders (VAEs)

| Pros | Cons |
|---|---|
| Data Efficiency: VAEs can learn a compact representation of the data. | Gaussian Assumption: Standard VAEs assume a Gaussian prior over the latent variables, which may not match the true structure of the data. |
| Anomaly Detection: VAEs can effectively detect anomalies by comparing the input data with its reconstruction. | Reconstruction Loss: VAEs might not perfectly reconstruct the input data, especially if the data is complex or noisy. |
| Interpretability: The latent space of VAEs can have interpretable dimensions, although this is not guaranteed. | |

Generative Adversarial Networks (GANs)

| Pros | Cons |
|---|---|
| High-Quality Data Generation: GANs can generate high-quality, realistic data. | Training Difficulty: GANs can be difficult to train due to the adversarial nature of the training process. |
| Unsupervised Learning: GANs do not require labeled data for training. | Mode Collapse: GANs can suffer from a problem known as mode collapse, where the generator produces limited varieties of samples. |